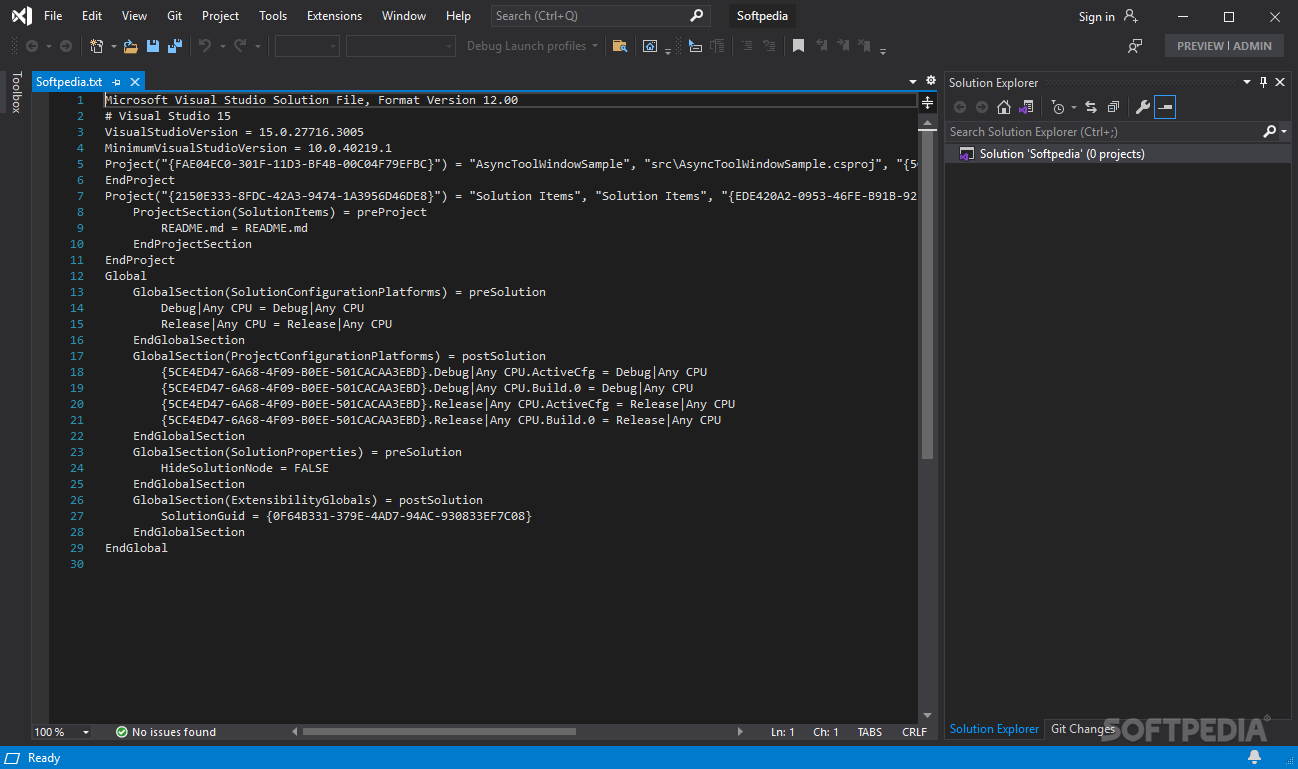

For a long time now developers have been asking why Visual Studio hasn’t made the switch to 64-bit. Rather than effort or opportunity cost, the primary reason is performance. This may seem counter-intuitive, but the shift from 32-bit to 64-bit isn’t an automatic win. While you can benefit from having access to more CPU registers, that mostly benefits applications that do heavy number crunching on large arrays. With an application such as Visual Studio that works with large, complex data structures, the 64-bit pointer overhead dwarfs the benefits of more registers.

Your pointers will get bigger, your alignment boundaries get bigger, your data is less dense, and equivalent code is bigger. You will fit less useful information into one cache line, code and data, and you will therefore take more cache misses. Your processor's cache did not get bigger. Even other programs on your system that have nothing to do with the code you’re running will suffer.

And you didn’t need the extra memory anyway. Most of Visual Studio does not need and would not benefit from more than 4G of memory. Any packages that really need that much memory could be built in their own 64-bit process and seamlessly integrated into VS without putting a tax on the rest. This was possible in VS 2008, maybe sooner. Dragging all of VS kicking and screaming into the 64-bit world just doesn’t make a lot of sense.

That isn’t to say Visual Studio can’t be improved. But Rico Mariani argues that the solution isn’t to give VS more memory, but rather to make it use less.

Now if you have a package that needs >4G of data *and* you also have a data access model that requires a super chatty interface to that data going on at all times, such that, say, SendMessage isn’t going to do the job for you, then I think maybe rethinking your storage model could provide huge benefits. In the VS space there are huge offenders. My favorite to complain about are the language services, which notoriously load huge amounts of data about my whole solution so as to provide IntelliSense about a tiny fraction of it. That doesn’t seem to have changed since 2010. I used to admonish people in the VS org to think about solutions with, say, 10k projects (which exist) or 50k files (which exist) and consider how the system was supposed to work in the face of that. Loading it all into RAM seems not very appropriate to me.
